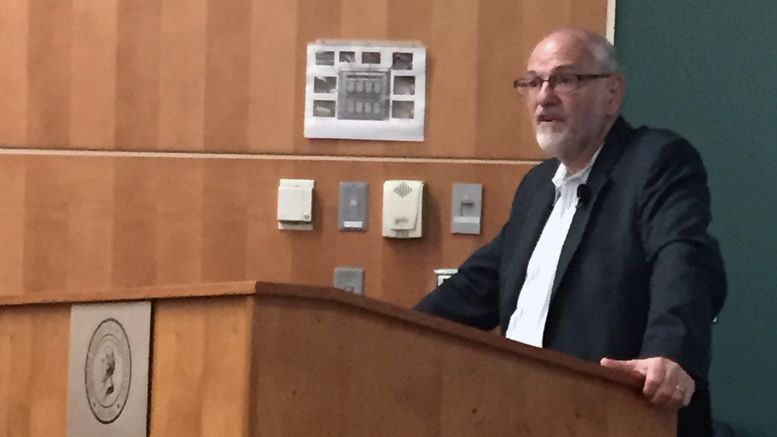

Ethicist and scholar Wendell Wallach presented his new book, A Dangerous Master: How to Keep Technology from Slipping Beyond Our Control, this past Wednesday. With science advancing rapidly in fields such as human cloning, embryonic stem cell research, and artificially intelligent robotics, the fear of technology transitioning from “a good servant to a dangerous master” becomes all the more tangible. Will humanity reach a point where it can no longer control technology? Will technology create more problems than it solves? Wallach opened his talk with these controversial topics to show that their ethics need to be discussed. He then predicted where technology is headed, gave three reasons supporting that prediction, and suggested how the outcome he foresees can be prevented.

Wallach stated that social disruptions, public health problems, and economic crises will increase dramatically over the next 30 years because of technology. He gave three reasons: humanity’s reliance on complex systems is increasing, the pace of discovery and innovation is too rapid, and a great many things can go wrong with advancing technologies. He pointed out that complex systems are unpredictable and difficult to manage, and that humanity is currently in a “tech-storm where continuing showers can be destructive.”

However, Wallach did not predict that humanity is going to what he called “hell in a hand basket” without proposing possible solutions. He stated that there are inflection points, or windows of opportunity, at which change can be initiated and the trajectory of technology can be altered. Possible future inflection points include technological unemployment (computers taking jobs away from people) and the “robotization” of warfare (robots fighting wars instead of humans).

Wallach strongly believes that robots should not have lethal autonomy, stating that it is mala in se, or evil in itself. He also said that society should dismiss myths such as “TechnoSolutions,” the belief that technology can solve any problem. He suggested that responsibility for failures in technology should be built into the design process, and that ethicists and social theorists should be embedded in the process of creating new technologies. He concluded that technological development needs to slow down to a humanly manageable pace, because it needs “oversight, foresight, and planning.”

Wallach’s talk ended with an interactive discussion. “I never thought about these issues concerning the future of technology before,” said senior Matt Hall. “It really makes you wonder where we are headed.” Philosophy professor Gary Dobbins questioned Wallach’s interpretation of certain data in his presentation, arguing that “technology is not autonomous. We made this world the way it is.” Other members of the audience wondered whether technology or the people who made it are to blame, to which Wallach responded, “There is no human and technology. We live in a sociotechnological system.” Wallach left his audience understanding that technology has the potential to influence humanity as much as humanity has the power to influence it.